New Approaches, Old Method: Predicting IBNR with Machine Learning

Our team of actuaries and students presented this topic at Pinnacle University (Pinnacle U) in March 2021.

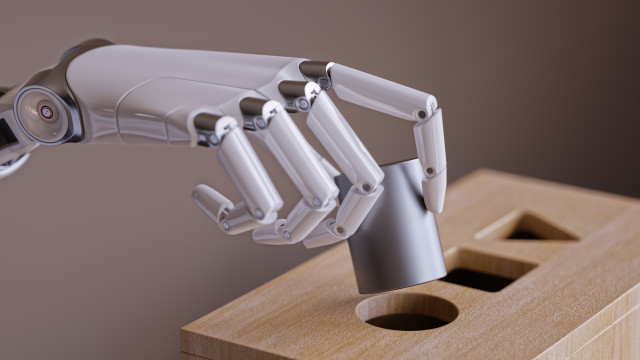

Machine learning is a branch of artificial intelligence (AI) that uses algorithms to teach a computer how to analyze data and find hidden patterns in it. It’s been called a “revolution,” and from self-driving cars to health care, it has begun to change the way we live our lives. It is so prevalent that we often overlook it. Just a few examples of its many uses include image recognition, voice recognition, traffic prediction and language translation. The insurance industry, too, employs machine learning for driver performance monitoring, AI assistants, image-based assessment of accident damage and fraud prevention.

Inspired by topics found in “Machine Learning and Traditional Methods Synergy in Non-Life Reserving” [1] and “The Actuary and IBNR Techniques: A Machine Learning Approach,” [2] our Pinnacle University group explored the emerging world of machine learning and how it fits into the insurance industry. Specifically, we looked into the possibility of using machine learning to estimate the incurred but not reported (IBNR) losses of a group of commercial auto liability policies.

To do this, we first estimated ultimate losses and then subtracted known incurred losses. Our chosen data set was Pinnacle’s analysis of industry data from 1990 to 2019. The data consisted of premium by year, along with paid and incurred loss triangles by year at maturities of up to 10 years (120 months).
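The subtraction described above can be sketched in a few lines. We did our work in R; the Python below is only an illustration, and the function name and dollar figures are hypothetical rather than taken from our data.

```python
def ibnr(ultimate_loss: float, incurred_to_date: float) -> float:
    """IBNR as the gap between estimated ultimate and incurred-to-date losses."""
    return ultimate_loss - incurred_to_date


# Hypothetical figures (in thousands of dollars), not values from the study.
predicted_ultimate = {2017: 1250.0, 2018: 1400.0, 2019: 900.0}
incurred_to_date = {2017: 1100.0, 2018: 1050.0, 2019: 950.0}

ibnr_by_year = {
    year: ibnr(predicted_ultimate[year], incurred_to_date[year])
    for year in predicted_ultimate
}

for year, amount in ibnr_by_year.items():
    print(year, amount)
```

Note that the made-up 2019 row yields a negative IBNR, which happens whenever the predicted ultimate falls below losses already incurred.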

We organized this data into a data set where each observation consists of policy year, months of maturity up to five years, paid losses, case reserve losses, incurred losses and premiums as possible explanatory variables. Because very little development takes place after 10 years, especially for this line of coverage, we assumed that incurred losses at 10 years could serve as a proxy for ultimate losses, the response variable of our models. This assumption was necessary due to constraints of the data from which we built the data set.
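One way to picture this reshaping is sketched below in Python. The nested-dictionary triangle layout, the `Observation` type and the sample amounts are all assumptions made for illustration, and case reserves are derived here as incurred minus paid.

```python
from typing import NamedTuple


class Observation(NamedTuple):
    policy_year: int
    maturity_months: int
    paid: float
    case_reserve: float
    incurred: float
    premium: float
    ultimate_proxy: float  # incurred losses at 120 months, used as the response


ULTIMATE_MATURITY = 120    # 10 years
MAX_FEATURE_MATURITY = 60  # explanatory maturities stop at five years


def build_observations(paid, incurred, premium):
    """paid/incurred: {policy_year: {maturity_months: amount}}; premium: {policy_year: amount}."""
    rows = []
    for year in sorted(incurred):
        by_age = incurred[year]
        if ULTIMATE_MATURITY not in by_age:
            continue  # year is too immature to have a response value
        for months in sorted(by_age):
            if months > MAX_FEATURE_MATURITY:
                continue
            inc = by_age[months]
            paid_amt = paid[year][months]
            rows.append(Observation(year, months, paid_amt, inc - paid_amt,
                                    inc, premium[year], by_age[ULTIMATE_MATURITY]))
    return rows


# Hypothetical two-year triangle; 2015 has no 120-month value yet.
paid = {2000: {12: 100.0, 24: 250.0, 120: 600.0}, 2015: {12: 150.0}}
incurred = {2000: {12: 300.0, 24: 450.0, 120: 610.0}, 2015: {12: 400.0}}
premium = {2000: 1000.0, 2015: 1200.0}

rows = build_observations(paid, incurred, premium)
```

Years without a 120-month value are skipped in this sketch because they have no response to train on.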

With a variety of machine learning techniques available, we decided to focus on three types – Artificial Neural Networks (ANN), K-Nearest Neighbors (K-NN) and Random Forest – to determine which technique would be most accurate.

ANNs are computational systems that mimic the human brain and its neural structure to perform tasks. These networks are fed information to learn how to correctly identify patterns so they can make accurate predictions when presented with new data. Because of their nature, ANNs are used in both supervised and unsupervised learning.

While ANNs are conceptually simple to understand, they fit the classical definition of a “black box,” meaning it is difficult to explain how they make individual predictions from the input they are given. Researchers are still trying to understand what happens between input and output. ANNs require numerical inputs and substantial parameter tuning for reasonable responses. John S. Denker, a well-known researcher, said of neural networks, “a neural network is the second-best way to solve any problem. The best way is to actually understand the problem.”

Our group performed our IBNR analysis in R, where we used the incurred losses per development period as the only explanatory variables. We noticed some inaccuracy due to the high level of collinearity in the data, a condition in which explanatory variables are so strongly correlated with one another that they carry largely redundant information. To correct this problem, we tried to tune our activation function to inflate the predicted ultimate values and make better predictions, but the resulting models failed to converge.
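A simple way to see collinearity is to compute the correlation between two candidate explanatory variables. The sketch below uses made-up incurred losses at 12 and 24 months; the numbers and the `pearson` helper are illustrative assumptions, not our data or our R code.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Hypothetical incurred losses for five policy years at two maturities.
incurred_12 = [300.0, 320.0, 410.0, 500.0, 560.0]
incurred_24 = [450.0, 470.0, 610.0, 760.0, 830.0]

r = pearson(incurred_12, incurred_24)
print(round(r, 4))
```

A correlation this close to one means the second column adds very little information beyond the first.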

The second machine learning type, the K-NN method, is a supervised, non-parametric ML algorithm that makes a prediction for a new observation by summarizing the K training data points most similar to it. In regression models, K-NN takes the average of the K closest training responses as the prediction for the new observation. For a very low value of K, the model often overfits the training data, which leads to high error when making predictions on new data. For a high value of K, the model often underfits and performs poorly on both training and testing data.
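The averaging step can be sketched as follows. This is a minimal Python illustration assuming Euclidean distance and a single toy feature; the data are invented to show how the prediction shifts as K grows.

```python
from math import dist


def knn_predict(train_x, train_y, query, k):
    """Average the responses of the k training points closest to `query`."""
    ranked = sorted(zip(train_x, train_y), key=lambda pair: dist(pair[0], query))
    nearest = ranked[:k]
    return sum(y for _, y in nearest) / k


# Toy training data: one feature per observation, plus one distant point.
train_x = [(1.0,), (2.0,), (3.0,), (10.0,)]
train_y = [10.0, 20.0, 30.0, 100.0]

pred_k1 = knn_predict(train_x, train_y, (2.2,), k=1)  # single closest neighbor
pred_k3 = knn_predict(train_x, train_y, (2.2,), k=3)  # three closest neighbors
pred_k4 = knn_predict(train_x, train_y, (2.2,), k=4)  # every training point
```

With K equal to the full training set, the prediction is simply the overall average (40.0 here), no matter how far the new observation sits from the distant point.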

Our K-NN model was constructed in R using standardized data separated into a training set and a testing set to help determine the optimal K-value to use. We initially used every possible explanatory variable in the data set, which gave us high error on the testing data. Collinearity likely affected our model, because we used incurred losses, paid losses and case reserve losses as explanatory variables. Removing incurred losses lowered the error substantially, as did tuning K, which gave an optimal value of three.
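The standardize-then-tune workflow might look like the sketch below. It assumes root mean squared error as the error measure and uses invented paid and case reserve amounts; our actual metric, data and R code are not reproduced here, so the K this toy example selects has no bearing on our result.

```python
from math import dist, sqrt
from statistics import mean, pstdev


def fit_scaler(rows):
    """Column means and standard deviations, learned from the training data only."""
    return [(mean(col), pstdev(col)) for col in zip(*rows)]


def standardize(rows, scaler):
    return [tuple((v - m) / s for v, (m, s) in zip(row, scaler)) for row in rows]


def knn_predict(train_x, train_y, query, k):
    nearest = sorted(zip(train_x, train_y), key=lambda p: dist(p[0], query))[:k]
    return mean(y for _, y in nearest)


def rmse(actual, predicted):
    return sqrt(mean((a - p) ** 2 for a, p in zip(actual, predicted)))


def holdout_error_by_k(train_x, train_y, test_x, test_y, candidates):
    """Testing-set error for each candidate K."""
    return {
        k: rmse(test_y, [knn_predict(train_x, train_y, q, k) for q in test_x])
        for k in candidates
    }


# Hypothetical (paid, case reserve) pairs and ultimate losses.
train_raw = [(100.0, 200.0), (150.0, 220.0), (210.0, 180.0),
             (260.0, 300.0), (320.0, 310.0), (400.0, 280.0)]
train_y = [600.0, 700.0, 760.0, 1050.0, 1200.0, 1300.0]
test_raw = [(130.0, 210.0), (300.0, 290.0)]
test_y = [650.0, 1150.0]

scaler = fit_scaler(train_raw)
train_x = standardize(train_raw, scaler)
test_x = standardize(test_raw, scaler)  # reuse the training scaler on test data

errors = holdout_error_by_k(train_x, train_y, test_x, test_y, candidates=range(1, 6))
best_k = min(errors, key=errors.get)
print(best_k, errors)
```

Standardizing matters for K-NN because distances would otherwise be dominated by whichever variable has the largest dollar scale.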

Random Forest, our third method, is rooted in decision trees, a supervised type of machine learning in which the data is split at points chosen from different explanatory variables. A Random Forest combines the predictions of many decision trees, which generally gives more accurate final predictions than any single tree.
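To make the idea of combining trees concrete, here is a heavily simplified Python sketch. It assumes each tree is a single-split "stump" fit on a bootstrap sample with a random subset of variables, and that the forest averages the stump predictions. Real Random Forest implementations grow much deeper trees; the data and function names below are invented for illustration.

```python
import random
from statistics import mean


def fit_stump(rows, targets, feature_ids):
    """Find the single split (feature, threshold) with the lowest squared error."""
    best = None
    for f in feature_ids:
        values = sorted({row[f] for row in rows})
        for lo, hi in zip(values, values[1:]):
            threshold = (lo + hi) / 2
            left = [t for row, t in zip(rows, targets) if row[f] <= threshold]
            right = [t for row, t in zip(rows, targets) if row[f] > threshold]
            l_mean, r_mean = mean(left), mean(right)
            sse = (sum((t - l_mean) ** 2 for t in left)
                   + sum((t - r_mean) ** 2 for t in right))
            if best is None or sse < best[0]:
                best = (sse, f, threshold, l_mean, r_mean)
    if best is None:  # the sample offered no split, so predict its average
        overall = mean(targets)
        return (0, float("inf"), overall, overall)
    return best[1:]


def fit_forest(rows, targets, n_trees, n_split_vars, seed=0):
    rng = random.Random(seed)
    n_features = len(rows[0])
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(rows)) for _ in rows]       # bootstrap sample
        feats = rng.sample(range(n_features), n_split_vars)  # random variable subset
        forest.append(fit_stump([rows[i] for i in idx],
                                [targets[i] for i in idx], feats))
    return forest


def predict(forest, row):
    """Average the predictions of every tree in the forest."""
    return mean(l if row[f] <= thr else r for f, thr, l, r in forest)


# Hypothetical (paid, case reserve, premium) rows and ultimate losses.
rows = [(100.0, 200.0, 1000.0), (150.0, 220.0, 1100.0), (210.0, 180.0, 1050.0),
        (260.0, 300.0, 1300.0), (320.0, 310.0, 1400.0), (400.0, 280.0, 1500.0)]
targets = [600.0, 700.0, 760.0, 1050.0, 1200.0, 1300.0]

forest = fit_forest(rows, targets, n_trees=25, n_split_vars=1, seed=0)
prediction = predict(forest, (300.0, 290.0, 1350.0))
print(prediction)
```

Because every tree predicts an average of training responses, the forest's prediction can never fall outside the range of responses it was trained on. That limit becomes relevant when newer years develop very differently from older ones.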

One advantage of Random Forest is that it does not require scaling or preprocessing of the data used in the model. This is useful when dealing with a large amount of data, which can be challenging to scale appropriately. On the other hand, one disadvantage of Random Forest is that a model can easily overfit.

We used R to implement the Random Forest method. There are two hyper-parameters we can tune: the number of trees used in the model and the number of variables sampled at each split or node. Testing different numbers of trees added no value to the predictions, so we used a standard value of 500. For the splitting variables, if all six variables were available at each node, then overfitting the model would be a concern. We followed the standard rule of total variables (six) divided by three, so we sampled two variables at each split. In the end, the results were not ideal, likely due to collinearity issues, but were the most favorable out of the three methods.
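The divide-by-three rule is easy to state in code. The Python helper below is a hypothetical illustration of that arithmetic, with a floor of one variable added as an assumption so the rule still works when there are fewer than three explanatory variables.

```python
def default_split_vars(n_explanatory: int) -> int:
    """Number of variables sampled per split: total divided by three, at least one."""
    return max(n_explanatory // 3, 1)


N_TREES = 500                           # the standard value we kept
split_vars = default_split_vars(6)      # six explanatory variables
print(N_TREES, split_vars)
```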

To compare the accuracy of all three machine learning models, we tested each on data not used for model development, the validation data sample. Specifically, we used our commercial auto liability data from 2015 to 2019.
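A comparison of this kind can be sketched as below. The error measure (root mean squared error), the generic model names and every number are assumptions for illustration only; they are not our models' actual predictions or results.

```python
from math import sqrt


def rmse(actual, predicted):
    """Root mean squared error between actual and predicted values."""
    return sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


# Hold out the most recent policy years from model development.
policy_years = [2012, 2013, 2014, 2015, 2016]
validation_years = [y for y in policy_years if y >= 2015]

# Made-up ultimate losses and predictions for the held-out years.
actual_ultimate = [1000.0, 1200.0]
predictions = {
    "model_a": [700.0, 1500.0],
    "model_b": [800.0, 1000.0],
    "model_c": [900.0, 1100.0],
}

errors = {name: rmse(actual_ultimate, preds) for name, preds in predictions.items()}
best_model = min(errors, key=errors.get)
print(best_model, errors)
```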

Results were not ideal. When tested on the validation data, the Random Forest model had the lowest error of the three models attempted. Each model’s predictions included a large share of unrealistic IBNR values, with many being negative. Negative IBNR is possible, but unlikely in the magnitude and frequency that we were predicting. Upon examining the new years of data, we believe these results should have been expected. The paid and incurred values in the newer periods became much higher, much faster than in the older years of data. In short, the validation data on which we tested our models was dramatically different from the training data used to build them.
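One diagnostic that could flag this kind of shift before modeling is a comparison of development factors between older and newer years. The sketch below is an assumption about how such a check might look, not something we ran; the incurred amounts are invented.

```python
from statistics import mean


def link_ratios(incurred, from_months, to_months):
    """Age-to-age development factor for each year that has both maturities."""
    return {
        year: by_age[to_months] / by_age[from_months]
        for year, by_age in incurred.items()
        if from_months in by_age and to_months in by_age
    }


# Hypothetical incurred losses by policy year and maturity (months).
incurred = {
    2005: {12: 300.0, 24: 450.0},
    2006: {12: 400.0, 24: 600.0},
    2016: {12: 500.0, 24: 1000.0},
    2017: {12: 600.0, 24: 1200.0},
}

ratios = link_ratios(incurred, 12, 24)
training_avg = mean(r for year, r in ratios.items() if year < 2015)
validation_avg = mean(r for year, r in ratios.items() if year >= 2015)
print(training_avg, validation_avg)
```

A large gap between the two averages would suggest that models trained on the older years are being asked to extrapolate.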

There are a few conclusions to take away from this exercise. First, it is possible to adapt these machine learning techniques to function similarly to traditional actuarial models. In addition, these techniques can produce reasonable IBNR estimates given the correct circumstances. However, further adjustments may be required to improve accuracy. This is an important lesson to keep in mind when working with machine learning modeling (and modeling in general): be sure to keep an eye out for inconsistencies in data development, and adjust modeling assumptions accordingly.

Joe Alberts is an actuarial analyst I with Pinnacle Actuarial Resources in the Chicago office. He holds a Bachelor of Science degree in mathematics with a minor in physics from Illinois Wesleyan University and has experience in assignments involving loss reserving, loss cost projections and group captives. He is actively pursuing membership in the Casualty Actuarial Society (CAS) through the examination process.

Tatiana Rodriguez is an actuarial analyst I in Pinnacle's Bloomington office. She holds a Master of Science degree in mathematics with a concentration in actuarial science from Illinois State University, and has experience in assignments involving loss reserving, loss cost projections, group captives and microcaptives. She is actively pursuing membership in the CAS through the examination process.

Clinton Aboagye is a graduate of Illinois State University’s (ISU) class of 2021 with a master’s degree in actuarial science. He is a recipient of the 2020 Midwestern Actuarial Forum Scholarship and served as a graduate research assistant and teaching assistant during his time at ISU.

[1] Jamal, S., Canto, S., Fernwood, R., Giancaterino, C., Hiabu, M., Invernizzi, L., Korzhynska, T., Martin, Z., & Shen, H. (2018). Machine Learning and Traditional Methods Synergy in Non-Life Reserving (MLTMS).

[2] Balona, C., & Richman, R. (2020). The Actuary and IBNR Techniques: A Machine Learning Approach. Property.